Prioritising to use built-in functions in interpreted languages

We need to avoid as mush as possible the use of for loop in our algorithm by implementing vectorisation method and using built-in functions as much as possible.

In interpreted languages, such as Python and MATLAB, their “for loop” execution time is very slow compared to compiled languages such as C/C++. Hence, we need to avoid as mush as possible the use of for loop in our algorithm by implementing vectorisation method and using built-in functions as much as possible.

We have presented and discuss the vectorisation method in the previous post.

In this post, we will focus on presenting the reason on why built-in functions provided by the interpreted languages are very fast and should be used as much as possible.

The vectorisation method and the utilisation of built-in functions are very related each other. Because, in many situations, to be able to avoid for loop and to use built-in functions, we need to implement the vectorisation method to our algorithm.

Although, there are situations where we cannot vectorise our algorithms.

In addition, the reason why built-in functions (from the language itself or from a mature library for the language) are very fast compared to algorithms utilising for loop is also explained and discussed.

Let us start the discussion!

READ MORE: TUTORIAL: C/C++ programming with Qt framework, OpenCV and Eigen libraries for ellipse fitting from images

Algorithm complexity and performance comparisons

This topic is already well-known. Hence the purpose of this discussion is as a reminder and refreshment for those who already familiar with algorithm complexity and Big $O$ notation [1,2].

The main goal of algorithm complexity analysis is to be able to rationally compare algorithms in term of the order of growth and not in term of the execution time.

Because the execution time does not only depend on the complexity of the algorithm, but also depends on [2]:

- The efficiency of the algorithm implementation (is it vectorised or not? Is it utilising built-in functions or not?)

- The characteristic of the hardware and the programming language used. Compiled language (such as C/C++) is basically faster than interpreted languages (such as Python and MATLAB).

- The structure of the dataset to be processed (does the data sorted, partially sorted?)

- The size of the problem or the data.

For example, we have two types of algorithms. One algorithm requires $5000n+10$ steps and the other requires $10n^{2}+2n+1$ steps. $n$ is the number or the size of inputs.

If we compare the algorithm 1 with $5000n+10$ steps and algorithm 2 with$10n^{2}+2n+1$ steps, for $n=2$ (small size), algorithm 1 requires 10010 steps. Meanwhile, algorithm 2 requires 45 steps.

For this small input of $n$, it seems that algorithm 2 is much better than algorithm 1.

However, when we have input $n=1000$, then algorithm 1 requires 5000010 steps. Meanwhile, algorithm 2 requires 100002001 steps. Further increment of the $n$ will cause algorithm 2 much worse than algorithm 1.

Here, we can see that, the order of growth of algorithm 2 is higher than algorithm 1. Hence, algorithm 2 is more complex than algorithm 1.

The main reason is that, The first algorithm has a leading term of $5000n$ and the second one has $10n^{2}$.

The leading term of algorithm 2 (that is $n^{2}$ is higher than algorithm 1 (that is $n$).

Here, we can see that the size of the coefficient on the number of steps formula is not important or relevant. Because, there will always be $n$ where $c_{1} n^{2}>c_{2} n$.

This reason explains why built-in functions in a programming language usually has much better performance than those without built-in functions.

Built-in functions in a language (or from a mature library for the language) usually have a low order on the leading term compared to conventional for loop process (that is, smaller leading term). In addition, the built-in functions have good code implementations.

These two reasons cause the built-in functions have very fast execution time!

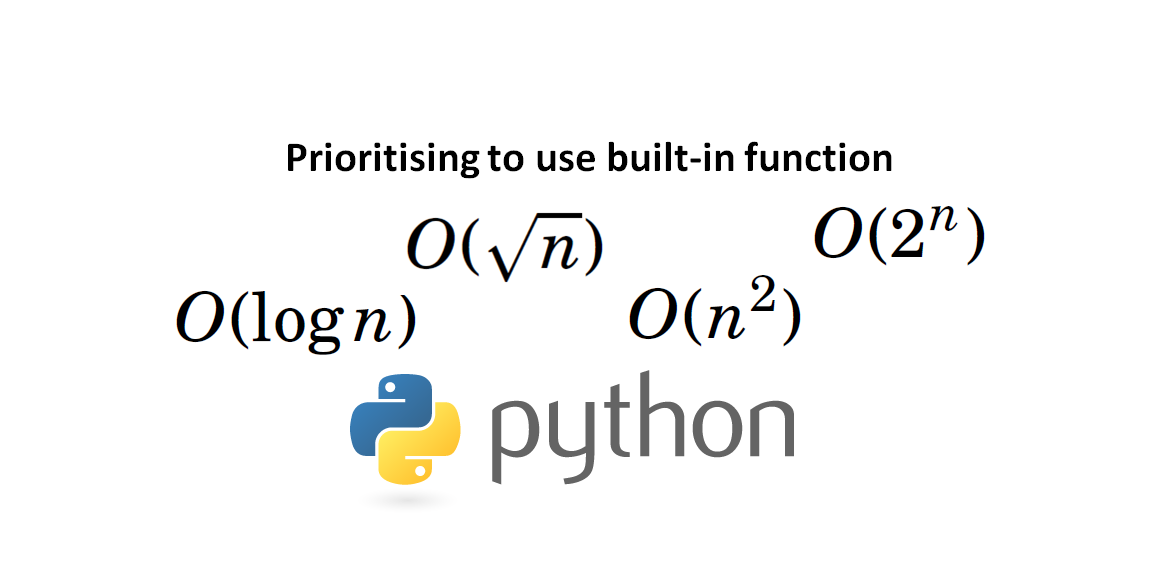

Algorithm complexity classes

Below is the class of algorithm complexity from the best to the worst (slower/complex). The worst algorithm means that it has larger leading term, is more complex and has higher order of growth with respect to input size.

The class of algorithm complexity are as follow:

- $O(1)$. Constant time. The algorithm does not depend on the size or number of inputs. This is the best algorithm performance.

- $O(\log n)$. Logarithmic. The algorithm steps are halved the input size at each step.

- $O(\sqrt{n})$. Square-root. This algorithm is better than $O(n)$. Because, it has a special property of $\sqrt{n}=n/ \sqrt{n}$.

- $O(n)$. Linear. The algorithm will calculate the inputs in a constant time of number (n times).

- $O(n \log n)$. Linearithmic. It is an indication that an algorithm sort the input or the algorithm require a data structure where it takes $O(\log n)$ for each operation on the data.

- $O(n^{2})$. Quadratic. The algorithm contains two nested loops.

- $O(n^{3})$. Cubic. The algorithm contains three nested loops.

- $O(2^{n})$. Exponential. The algorithm indicates that it goes through all subsets of the input elements.

- $O(n!)$. The algorithm indicates that it goes through all permutations of the input elements.

More detailed explanations of algorithm complexity and comparison can be found in [1,2].

READ MORE: TUTORIAL: C/C++ implementation of circular buffer for FIR filter and GNU plotting on Linux

- Python for Data Analysis: Data Wrangling with Pandas, Numpy, and Ipython – A book that provides comprehensive guides for manipulating, processing, cleaning, and crunching datasets in Python.

Execution time comparisons between using and NOT using built-in functions in Python

Now, we will discuss two, simple but clear, examples of the execution speed of built-in functions (from the language itself or from a mature library for the language). The first case is a summation calculation an the second one is the standard deviation calculation from data.

The case studies use Python programming language.

All the code below can be run in a single program file. But, the presentation is carried out per code segment.

1. Calculating a summation operation

The code segment below is used to import libraries and to set the parameter of the data. That is, in these two examples, we set $n=1000000$, that is our data contain 1000000 number of elements or values.

The central processing unit CPU execution time is calculated from the time the CPU used to finish the calculations. We use “time” library to calculate the CPU time of the calculations.

An array $arr$ is initialised to be a vector with n=1000000 elements and the value is between 0 and 1, equally spaced.

import time

import random

n=1000000

#Creating a vector with n element from 0 to 1

arr=np.linspace(0,1,n)

Next, we will then sum all the 1000000 elements of the array to get the total value. In the code segment below, we use a single for loop to iterate all the array element and add them one-by-one. The complexity of this algorithm is $O(n)$.

total_sum=0

for ii in range(n):

total_sum=total_sum+arr[ii]

total_time=(time.time()-start_time)

print("total time: ", total_time)

The total execution time used to sum all the array elements is 0.3201 s.

Next, we will perform the same computation but by using a built-in function “np.sum”. By using the built-in function, the code becomes compact.

total_sum=np.sum(arr)

total_time=(time.time()-start_time)

print("total time: ", total_time)

The total execution time for performing the same summation computation of the 1000000 elements array is 0.0009 s. This execution time is three magnitudes faster than the one without using the built-in function “np.sum”!

2. Calculating the standard deviation from data

In this example, the calculation of standard deviation is presented.

The code segment below shows the calculation of the standard deviation using two for loops that is not nested.

The first for loop is to calculate the average of the data. This average value is required to calculate the standard deviation that is performed in the second for loop. The number of steps of this algorithm is 2n from the two for loops that are not nested. Hence, the leading term is $n$ so that the algorithm complexity is $O(n)$.

total_sum=0

for ii in range(n):

total_sum=total_sum+arr[ii]

average=total_sum/n

total_sum2=0

for ii in range(n):

total_sum2=total_sum2+(arr[ii]-average)*(arr[ii]-average)

std=np.sqrt(total_sum2/(n-1))

total_time=(time.time()-start_time)

print("total time: ", total_time)

By implementing for loop to calculate the standard deviation, the total execution time is 1.272 s.

Next, the built-in function “np.std” is used to calculate the standard deviation. Again, with the built-in function, the code becomes compact.

std=np.std(arr)

total_time=(time.time()-start_time)

print("total time: ", total_time)

by using the built-in function to calculate the standard deviation, the total execution time 0.005 s. Again, this execution time is three magnitudes faster!

It is important that the built-in functions can only be used when our data is a type of vector! That is why, most of the time we need to vectorise our algorithm to be able to use the built-in functions.

READ MORE: Vectorising linear sequence search in MATLAB programming

Conclusion

In this post, the reason on why we need to use as much as possible all built-in functions (when possible) when using Python (and other languages, especially the interpreted one) to have the fastest possible execution time with the language, is explained.

However, to be able to use built-in functions, sometimes we need to vectorise our computation. There are cases, though, where we cannot vectorise our algorithm.

The well-known algorithm complexity and performance comparison are presented first as refreshment. The famous Big $O$ notation is also re-presented again.

Finally, some real examples of comparison of execution speed of computations between the one that uses built-in algorithm and the on that does not utilise the built-in functions.

From the comparison results, the execution time of computation using built-in functions can be faster up to three magnitudes than the computation without using the built-in algorithms.

References

[1] Downey, A., 2012. “Think python” O'Reilly Media, Inc.

You may find some interesting items by shopping here.